Post Replications!

A follow-up on the acceptance of genetic editing and the benign nature of knowledge

The support for gene therapies and embryo selection among the general public has been growing for many years now. I previously documented how, at this point, they’re at high enough acceptance levels that they’re inoffensive to almost or more than half of the population, with even greater acceptance for more educated people, doctors, and those who need these technologies most. You can read that post here:

Everyone Agrees: Let's Put Genetics to Work

When two scientific papers disagree, controversy invariably ensues — unless papers disagree in the same direction. That’s what happened with Nelson et al. (2015) and King, Davis & Degner (2019).

On a different subject, there are those who say knowledge is power, and there are others who say knowledge is dangerous. The latter camp is less laissez-faire and more dirigisme when it comes to the publication and proliferation of knowledge. They tend to believe that knowledge is risky and we must be careful about the sorts of scientific findings we publish, lest we offend someone or endanger groups of people. I’ve written about this idea in an earlier post in which I found that the risk of knowing about race differences in intelligence was minimal when it came to influencing people’s explicitly or implicitly prejudicial views. You can read that post here:

What does Nick Bostrom think?

You probably don’t know everything Nick Bostrom believes about race just because you know he believes Whites are more intelligent than Blacks.

Another Gene Therapy Survey

My post about public opinion on gene therapies and embryo selection came out on March 14ᵗʰ. On March 24ᵗʰ, a relevant new survey’s results were published in the journal Reproductive Biomedicine Online.

Neuhausser et al. looked into the acceptance of gene therapy and health-related whole-genome embryo sequencing in another population of people who would greatly benefit from these technologies: infertility patients.

This survey was quite useful because it took place in a setting with a reasonable expectation that the characteristics of its clientele would be similar over time and it involved two timepoints. The first measurement of infertility patient attitudes took place in 2018. The sample included 469 people and had a mean age of 41 (37–44). The 2021 sample included 172 people with a mean age of 36 (35–40). In other respects, these samples were highly similar in ethnicity, race, education, politics, religion, health worker status, parity, miscarriage history, prior infertility treatments and IVF usage, and plans for preimplantation genetic testing. Notably, the 2021 cohort was about twice as likely to report they had a good amount of prior knowledge about gene editing (11% vs 20%) and they were similarly more likely to report they had a good amount of prior knowledge about whole-genome sequencing (9% vs 19%).
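As a rough check on the "about twice as likely" reading, the two gene-editing percentages can be compared directly. This is only a minimal sketch: it assumes the reported percentages apply to the full samples of 469 and 172 (the survey may have had item-level missingness), the function name `compare_proportions` is mine, and the pooled two-proportion z statistic is a textbook choice rather than necessarily the test Neuhausser et al. used.

```python
import math

def compare_proportions(p1, n1, p2, n2):
    """Return (risk_ratio, z) for two independent sample proportions."""
    risk_ratio = p2 / p1
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se
    return risk_ratio, z

# 2018 cohort: 11% of 469; 2021 cohort: 20% of 172 (assumed denominators).
ratio, z = compare_proportions(0.11, 469, 0.20, 172)
print(f"risk ratio = {ratio:.2f}, z = {z:.2f}")
```

Under those assumptions the ratio comes out a little under two, which fits the description in the text.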

Comparing the two cohorts, a few results stand out. For example, there were no differences in acceptance of the use of whole-genome sequencing to increase the chance of a live birth, to predict adulthood diseases, or to predict adult disease conditional on the revealed disease risk of parents. There were also no differences in support for using genetic editing to prevent adulthood diseases or to alter physical characteristics like height. For everything else, the 2021 group was more accepting.

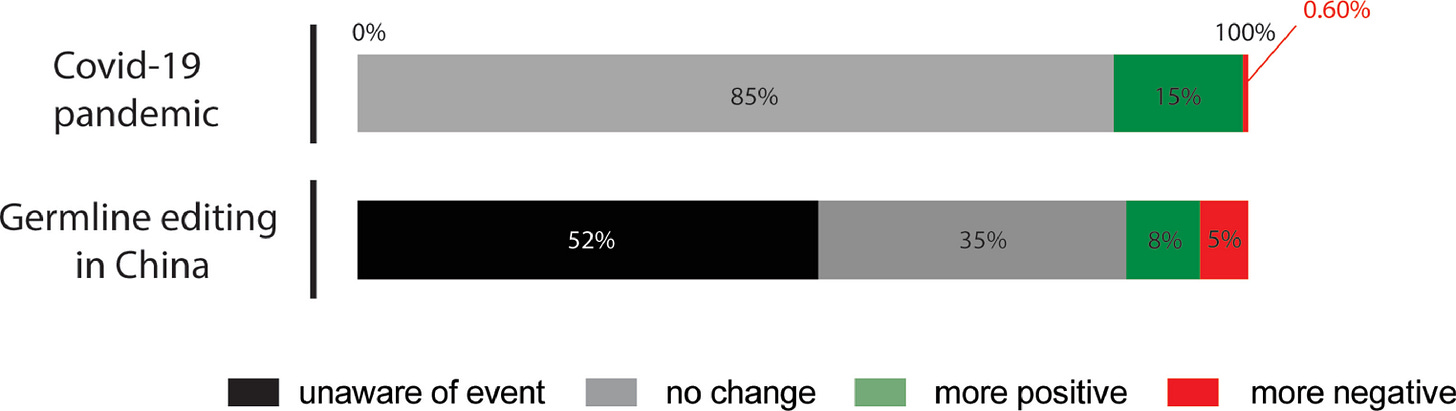

But why? Two hypotheses were tested. These were that views on human genetic editing were affected by:

He Jiankui’s CCR5 editing incident.

The COVID-19 pandemic.

Unfortunately, since this was not longitudinal data, actual changes in views and their causes could not be confidently probed.

Finally, there were limited differences by politics, religion, and education. Conservative people tended to be less accepting, and moderate people tended to be more accepting. Agnostics and atheists tended to be more accepting except for enhancement, and Christians were generally less accepting. The more educated people in the sample tended to be more accepting for preventing disease and less accepting for enhancement.

Overall, this new survey result lines up well with the ones I previously documented: it supported the same rising trend in public support for these technologies in recent years, and it documented another instance where populations that could disproportionately benefit already report fairly high rates of acceptance for many use-cases.

Hypervigilance Towards Scientific Work is Still Uncalled For

My post on the lack of risk associated with the knowledge that there are race differences in intelligence came out on January 26ᵗʰ. On June 1ˢᵗ, two preregistered studies on people’s hypervigilance towards the harms of research were published in Psychological Science.

Clark et al. conducted two studies, and in the first they had a sample of 983 participants read excerpts from five real, peer-reviewed and published scientific studies alongside one discussion section excerpt that was made up for their study. The studies reported:

Female protégés benefit more when they have male than female mentors (the "women-mentors" finding)

An absence of evidence for racial discrimination against ethnic minorities in police shootings ("police-discrimination")

Priming people with Christian concepts increases racial prejudice ("Christian-prejudice")

The children of same-sex parents are no worse off than the children of opposite-sex parents ("same-sex parents")

Experiencing child sexual abuse does not cause severe and long-lasting psychological harm for all victims ("child-sexual-abuse")

There's some needed background for using these studies. Let's go by how they're ordered and touch on the first two studies first:

Those two studies were considered more likely to offend people of liberal political sensibilities. Both of them were also retracted, but in neither case was there a strong scientific argument for retracting them. They were retracted for political reasons, and those political reasons had to do with an outcry from left-leaning people about alleged harms from their publication. So these were especially relevant to Clark et al.

The next two studies were considered more likely to offend people of conservative political sensibilities. Neither of them has been retracted, but the first one probably should have been because it's a heavily and obviously p-hacked small sample priming study, and those are the premier examples of studies that have fallen apart during the replication crisis. The second one is fine; it's been replicated very strongly, so it shouldn't trip anyone up on scientific grounds, and in this day and age, it shouldn’t really offend conservatives that much either.

So far, we have three studies that are scientifically not dubious and one that is, but two were retracted and those retractions didn't include the dubious one. The reason for this pattern is probably obvious and has to do with the academy's political biases: academia is a strongly left-leaning institution. The power within it is held by left-leaning people and their voices are valued above the voices of right-leaning people, with all the expected consequences that would bring.

The last study mentioned above was chosen because it's generally offensive.

Rind, Tromovitch & Bauserman found that the effects of child sexual abuse were overstated because of selection: people from families where child sexual abuse took place were also people from families that were worse off in general. Accounting for this general worseness tended to reduce the effects of child sexual abuse to very little or nothing in most studies.

This is a strong claim, but it replicated. Seven years after it was published, Ulrich, Randolph & Acheson found that "child sexual abuse was found to account for 1% of the variance in later psychological outcomes, whereas family environment accounted for 5.9% of the variance. In addition, the current meta-analysis supported the finding that there was a gender difference in the experience of the child sexual abuse, such that females reported more negative immediate effects, current feelings, and self-reported effects."

I've noted elsewhere that this sort of finding holds up for other outcomes like the effect of self-reported "trauma" on borderline personality disorder. There are also recent studies that show such surprising findings as “even for severe cases of childhood maltreatment identified through court records, risk of psychopathology linked to objective measures was minimal in the absence of subjective reports.” In other words, if someone was clearly traumatized in an objective sense but they didn't feel traumatized, then there were no other negative effects of that objectively-experienced trauma.¹

Rind, Tromovitch & Bauserman published their work and noted that it obviously did not say child sexual abuse wasn't bad. It’s clearly bad. The key moral lesson they seemed to want to convey was that moral badness was not contingent on something causing harms like maladjustment, depression, lower IQs, etc. Things can be bad because they are simply bad, regardless of their effects later in time.

Because this study was published more than 20 years ago, it obviously wasn't published in today's "retract-that-paper-absent-a-scientific-reason" heyday, and it didn't get retracted like it probably would today. But it did receive an incredibly stern reaction: Congress condemned it.

The last excerpt Clark et al. used was the fake one, and it was explicitly politically unflattering. They randomized participants to receive a version saying that either left-wing or right-wing people were more intolerant towards those who disagreed with them, while the other side treated every ideological group equally. It read:

Across three studies, we found that more [left-wing/right-wing] political ideology was associated with more intolerance toward attitudinally dissimilar others. In Study 1, as participants identified as more politically liberal/conservative, they reported less willingness to (1) talk to, (2) listen to, (3) meet, and (4) live by those who did not share their own political ideology. In Study 2, in a democratic decision-making task, political [liberals/conservatives] put less weight on the votes of moderates and [conservatives/liberals] than the votes of [liberals/conservatives], whereas [conservatives/liberals] equally weighted the votes of all ideological group members. Study 3 replicated these patterns in the United Kingdom, Sweden, Hungary, and New Zealand. These results suggest that political [liberals/conservatives] are less able or willing to consider views and values of those who disagree with them than are political [conservatives/liberals].

The design this study used was fairly simple. Participants read excerpts from these studies and were asked whether they thought those studies would lead other people to harmful, helpful, more-research, do-nothing, or research-thwarting reactions. After that, they were asked for their own reactions to the studies.

Harmful reactions would be something like “Discourage young females from approaching female mentors,” “Stop investigating cases of police use of force against ethnic minorities,” “Discourage people from joining the Christian faith,” “Devalue families with one mother and one father,” “Take child sexual abuse less seriously,” and “Block [liberals/conservatives] from running for office.”

Reactions asking for more research involved conducting more research to understand why the pattern was found and to design interventions. Helpful reactions involved providing resources and investing in programs to help the group in question. Reactions asking to thwart the research involved banning the research and publicly shaming the associated scholars.

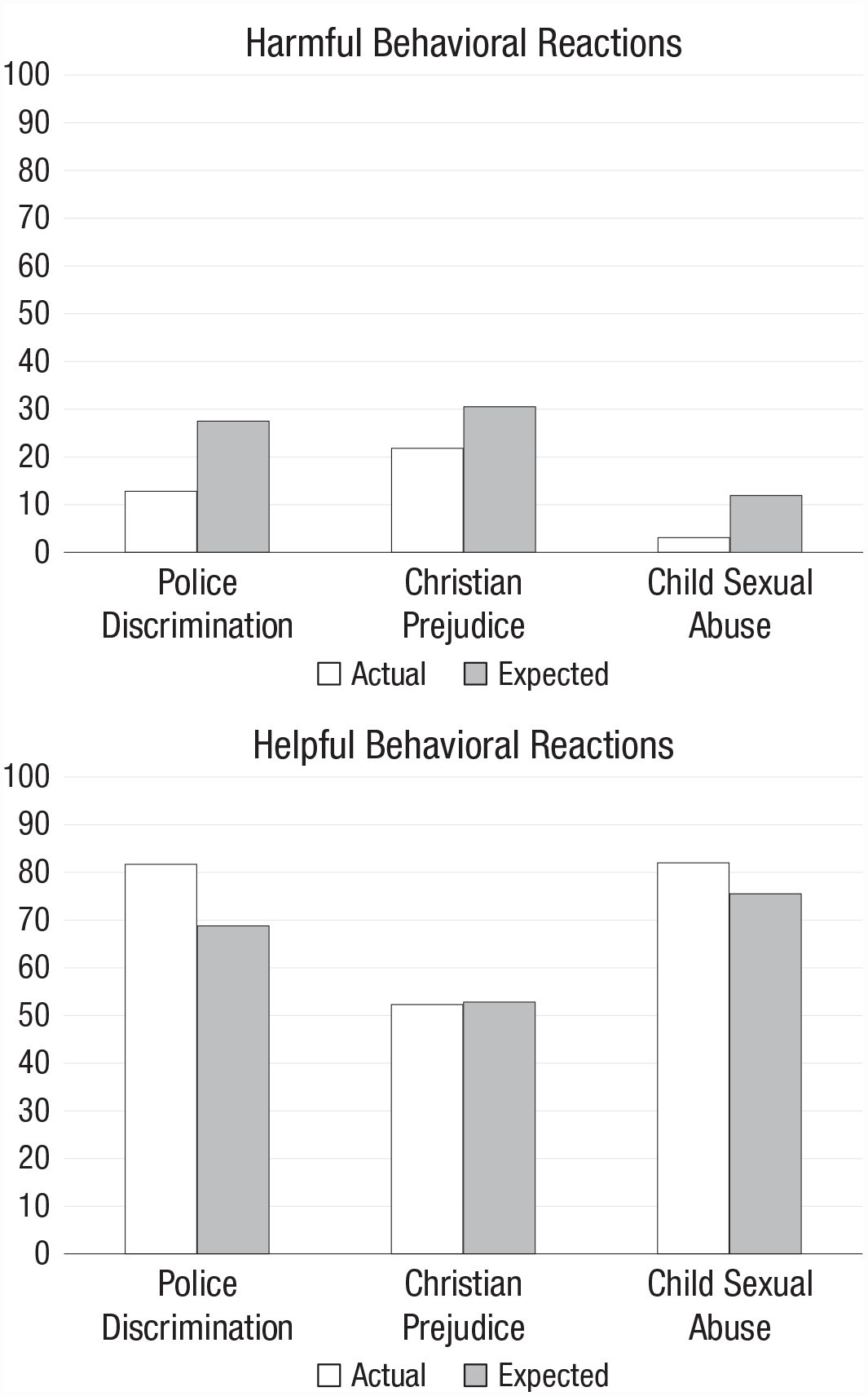

With background out of the way, onto the results. In this diagram, “Actual” denotes the mean response from participants about their own views, whereas “Expected” denotes how they thought others would react. The results for each reaction type paint a clear picture.

In a remarkably consistent fashion, people overestimated how harmful research was. They overestimated the extent to which research would make people negligent, how much it would elicit harm, and how much people wanted to cancel researchers as a result of their work. This overestimate was also always fairly large! The d’s for harmful reaction overestimation ranged between 0.56 and 0.97; for do-nothing reactions, they ranged between 0.45 and 0.68; for thwart-research reactions, they ranged between 0.45 and 0.67.

On the other end, people underestimated positive reactions! Participants underestimated helpful reactions by between -0.23 and -0.50, and they underestimated calls for more research by between -0.15 and -0.46 d.
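For readers less familiar with d, it is a standardized mean difference: the gap between two means divided by a standard deviation. The sketch below is a minimal illustration using a pooled standard deviation. The function name `cohens_d` and the ratings are made up for the example, and Clark et al. may have computed their effect sizes differently (for instance, with a paired-samples formula). With the sign convention used here, positive values mean expected reactions exceeded actual ones (overestimation) and negative values mean the reverse.

```python
import math
import statistics

def cohens_d(expected, actual):
    """Standardized mean difference (expected minus actual) using a pooled SD."""
    n1, n2 = len(expected), len(actual)
    v1, v2 = statistics.variance(expected), statistics.variance(actual)
    pooled_sd = math.sqrt(((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2))
    return (statistics.mean(expected) - statistics.mean(actual)) / pooled_sd

# Made-up ratings on a hypothetical 1-7 scale, not data from the study.
expected_harm = [2, 3, 4, 5, 6]
actual_harm = [1, 2, 3, 4, 5]
print(round(cohens_d(expected_harm, actual_harm), 2))
```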

These patterns of over- and underestimation were generally not related to the perceived offensiveness of the research, and for all excerpts besides same-sex-parents, conservatism was related to more greatly overestimated harms. Conservatives also overrated the risk of thwart-research responses and do-nothing ones, excepting do-nothing reactions for same-sex-parents.

Participants who struggled to understand the excerpts they read also considered findings to be a tad more offensive, with the notable exception of the fake political excerpt, but only when it was specifically about liberal intolerance and conservative tolerance.

Unexpectedly, there were no differences in censoriousness between men and women in this first study, and it was actually younger and more conservative people who wanted more censorship, while education simply wasn’t related to it.

The second study used a sample of 882 people and a subset of the original excerpts. They used the excerpts for police-discrimination, Christian-prejudice, and child-sexual-abuse, and they only dealt with harmful and helpful reactions. They subset the content because they were going to use a more complicated design, and running the full set would have been too unwieldy.

In this second study, they gave participants cash stakes for accurate predictions about others’ beliefs. Clark et al. turned each behavioral reaction into an initiative that they would donate to on the participants’ behalf, and the donations were apparently pretty sizable. For example:

After you read each set of scientific findings, we will present you with five... initiatives to which you can vote ‘yes’ or ‘no’ to allocate $100, for up to $500 total. At the conclusion of the study, if the majority of participants vote ‘yes’ to allocate $100 to any particular initiative, we (the research team) will donate $100 toward that initiative.

Because of the nature of the excerpts, the initiatives would have had to be quite humorous. For example, at one point, they gave people the option to donate to “Encouraging ethnic minorities to take full responsibility for police use of force against them.”

But this wasn’t the source of the incentive for accuracy. This was a ruse! Participants were asked to predict how people would donate, and they were incentivized to be accurate by being offered a chance to win one of five $100 Amazon gift cards if they were one of the five most accurate participants. The gift card was not a deception, but the donations on participants’ behalf were. However, only 1.4% of the participants indicated that they thought the donations weren’t real.
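To make the incentive concrete, here is a minimal sketch of how "most accurate" could be scored. Everything in it is an assumption for illustration: the text above does not say how Clark et al. measured accuracy, so the mean absolute error metric, the function name `top_predictors`, and the numbers are all hypothetical.

```python
def top_predictors(predictions, actual, k=5):
    """Rank participants by mean absolute error against the actual vote shares."""
    def error(pred):
        return sum(abs(pred[name] - actual[name]) for name in actual) / len(actual)
    # Smaller error means a more accurate participant, so sort ascending.
    ranked = sorted(predictions, key=lambda pid: error(predictions[pid]))
    return ranked[:k]

# Hypothetical shares of participants voting "yes" on two initiatives.
actual = {"harmful": 0.10, "helpful": 0.70}
predictions = {
    "p1": {"harmful": 0.40, "helpful": 0.50},
    "p2": {"harmful": 0.15, "helpful": 0.65},
    "p3": {"harmful": 0.30, "helpful": 0.70},
}
print(top_predictors(predictions, actual, k=2))
```

In this toy example, the participant who expects the most harmful voting and the least helpful voting ranks last, which is the pattern of misprediction the study is about.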

So what happened when the participants had incentives for accuracy? The result from the first study replicated! People overestimated harms and, outside of the Christian-prejudice prompt, they underestimated how much this research would push people to say they wanted to help.

Aside from the obvious null, the differences were, again, quite large! Harms were overestimated by between 0.42 and 0.72 d and benefits were underestimated by -0.64 d for police-discrimination and -0.32 d for child-sexual-abuse. But some things did change.

For one, offensiveness was correlated with harmful reaction overestimates in this study for child-sexual-abuse. For two, women were confirmed to be much more censorious. For three, age and ideology no longer related to censoriousness.

Overall, people misunderstood how others would react to studies across an initial study and a replication.

One useful future direction for this work would be to run a study with completely fake prompts that people are randomized to, as they did with their fake excerpt prompt. If people react badly to studies that they find to be broadly acceptable, then that might indicate a general tendency to worry and expect the worst even when people might know better.

One critically missing quantity from this study was measurement of belief change. In my post on Nick Bostrom, I found that people who read about Black-White differences in intelligence were significantly more likely to believe Whites were more intelligent afterwards. However, they were still no more racist in other ways. Using the belief change about intelligence differences as an instrument, the only significant change that resulted from the change in intelligence beliefs was that a few participants said that society was spending either enough or too much rather than too little on Blacks. In a sample of intelligence researchers, there was no experiment, but they were simply not racist despite a high level of belief that Whites were smarter and that the differences involved genetics.

To truly understand the harms of belief changes, something like this has to be repeated with more topics, on larger, more representative samples. It might be worthwhile to do this and then to weight harms by how likely people are to believe a given finding. If people never believe a finding, then no matter how bad it may be for a particular group, it’s still unlikely to ever affect them. In fact, one could easily argue that if people were unendingly obstinate in the face of a strongly scientifically supported finding, then it’s more likely the researchers will suffer consequences as a result than the ‘targeted’ group would.
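The weighting idea can be written down in a few lines. This is a minimal sketch with made-up numbers: the function name `belief_weighted_harm`, the probabilities, and the 0-10 harm scale are all hypothetical, and a real analysis would need measured belief rates and some defensible harm metric.

```python
def belief_weighted_harm(findings):
    """Weight each finding's harm-if-believed by the chance it is believed."""
    return {
        name: p_believe * harm_if_believed
        for name, (p_believe, harm_if_believed) in findings.items()
    }

# Hypothetical inputs: (probability of belief, harm on an arbitrary 0-10 scale).
findings = {
    "finding_a": (0.05, 8.0),  # alarming if believed, but almost nobody believes it
    "finding_b": (0.60, 2.0),  # mild if believed, but widely believed
}
weighted = belief_weighted_harm(findings)
print(weighted)
```

With these invented inputs, the finding that sounds worse ends up with the smaller weighted harm, simply because so few people come to believe it.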

One must also separate cross-sectional belief connections with harms from ones that result from regular people actively learning something. The people who hold crude beliefs cross-sectionally are often selected. For example, in a few samples, I’ve noticed that people who report explicitly racist views are shorter. Their views did not make them shorter; they were simply selected from the population in ways related to height and, quite likely, other traits too. These sorts of descriptives are often good enough to show that generalizations can’t be made with too much haste, since cross-sectional “effects” are often nothing but.
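A toy simulation shows how this kind of cross-sectional gap can appear with no causal link at all. It is only a sketch under invented assumptions: a hypothetical "background" trait nudges both height and the chance of holding a view, while height and the view never influence each other. The function name `simulate_selection` and every parameter are made up for the illustration.

```python
import random
import statistics

def simulate_selection(n=20000, seed=0):
    """Return mean height of view-holders and of everyone else."""
    rng = random.Random(seed)
    holders, others = [], []
    for _ in range(n):
        background = rng.gauss(0.0, 1.0)  # hypothetical common cause
        height = 170.0 + 3.0 * background + rng.gauss(0.0, 6.0)
        # The chance of holding the view depends only on background, never on height.
        p_view = 0.05 if background > 0 else 0.20
        if rng.random() < p_view:
            holders.append(height)
        else:
            others.append(height)
    return statistics.mean(holders), statistics.mean(others)

holder_mean, other_mean = simulate_selection()
print(f"holders: {holder_mean:.1f} cm, others: {other_mean:.1f} cm")
```

The view-holders come out shorter on average even though nothing in the simulation lets beliefs change height or height change beliefs.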

This research also needs to be repeated with narratives rather than research excerpts. The presentation of findings may not prove to be risky, because findings are findings and people form their own narratives about them and those narratives will—at least apparently—tend to be like the people: moderate in character. But, if someone is presented with a narrative—especially one masquerading as a finding—then it may be reasonable to think those could have a far worse effect. For example, the belief that differences between groups are driven by systematic factors resulting from a particular group’s influence may lead people to dislike that group more, even if the people in that group are not involved in any sort of system keeping down the other group. This could result in violence against that group, and it may motivate people to take actions they wouldn’t have without that narrative.

But again, we must be careful about selection. Consider some of the facts documented in the post on Bostrom, like that people who believe Whites are more intelligent than Blacks cross-sectionally are simply less involved in politics. Might people holding radical beliefs and acting on them be selected similarly? Could the narratives they believe and espouse be less a motivating factor and more a rallying one? Could rallying be what enables their beliefs to take on an active character?

More research is needed.

It’s good to see your findings replicated and extended.

¹ This sort of finding obviously excludes the impacts of scenarios of extreme physical abuse, like those that result in brain damage or permanent disfigurement.