The Holistic Judgment Conceit

Holistic evaluations are for machines, not people

This was a timed post. The way these work is that if it takes me more than one hour to complete the post, an applet that I made deletes everything I’ve written so far and I abandon the post. You can find my previous timed post here.

It’s been seventy-one years since the renowned clinical psychologist Paul Meehl published his landmark Clinical Versus Statistical Prediction: A Theoretical Analysis and a Review of the Evidence. This text was a monograph that expanded on a series of lectures Meehl delivered between 1947 and 1950 at the universities of Chicago, Iowa, and Wisconsin, and to the employees of the Veterans Administration Mental Hygiene Clinic in Minnesota. The topic of the lectures can be summarized as follows:

Are we—the members of the psychiatric profession—better at diagnosing patients and predicting their outcomes than simple statistical models?

Ask any clinician this question and the answer will surely be “Of course.” Clinicians are wont to remind people that their field is nuanced, complex, and requires a critical eye for the sorts of things you can only figure out through experience. The data that can be obtained from psychiatric patients, on this view, lacks the granularity and je ne sais quoi needed to match their cultivated, professional understanding of the subtleties involved in diagnosis, prediction, and treatment recommendation.

Ask Paul Meehl, and he would’ve said “No”, with the data to back it up. Throughout his book1 he consistently argued that clinicians ought to tilt their eyes towards research and therapy, while leaving the prognostication and classification side of psychiatry within the realm of statistics.

Meehl’s argument was based on a bevy of observations, most notably that in all but one of the twenty studies available for review at the time, statistical methods performed at least as well as, and generally better than, clinicians: simple statistics beat the psychiatrists eleven times, tied on eight occasions, and lost just once. It was later discovered that the one study showing superior judgment by clinicians was in error: it was really a win for statistical prediction.2 Eleven years later, Meehl reviewed newer literature and found thirty-three statistical wins, seventeen ties, and another win for clinician judgment (which, like the first such win, was later found to be an error).

These findings weren’t colored by disdain for clinicians; Meehl was himself a practicing psychotherapist and, if anything, biased in favor of the exact opposite of the conclusions he arrived at. Nevertheless, his work inspired anger from within his clinical profession, much of which came out as incoherent attacks against him and his colleagues. In later work, Meehl even explicitly pointed out that he had been misrepresented in terms of how dire his conclusions seemed to be for clinicians.3 But time has only vindicated Meehl’s conclusions, and it has done so for what Meehl believed to be a simple matter of psychology:

Why should people have been so surprised by the empirical results? Surely we all know that the human brain is poor at weighting and computing. When you check out at a supermarket, you don’t eyeball the heap of purchases and say to the clerk, “Well it looks to me as if it’s about $17.00 worth; what do you think?” The clerk adds it up. There are no strong arguments, from the armchair or from empirical studies of cognitive psychology, for believing that human beings can assign optimal weights in equations subjectively or that they apply their own weights consistently.4

I think Meehl hit the nail on the head, but he should have gone further: he should have provided a wholesale condemnation of human attempts at holistic judgment.

Humans Don’t Do Holistic Judgments Well

Meehl’s conclusions have withstood the test of time. In a review published 52 years after his work came out, simple statistical models tended to outperform clinicians when it came to predicting and/or diagnosing things as diverse as…5

Brain impairment

Academic performance

Psychiatric diagnoses

Psychotherapy outcomes

Length of psychotherapy

Prognoses

Matching MMPI profiles to persons

Compliance with counseling plans

Lengths of hospital stay

Criminal offending in general and violence specifically

IQ

Suicide attempts

Training performance

Personality characteristics

Adjustment

Marital success

Career satisfaction

Prior hospitalization

Homicidality

Malingering

Lying

Juvenile delinquency

And so on. In this stem-and-leaf diagram, negative effect sizes indicate advantages for statistical prediction:

But, one may argue, these comparisons are unfair to clinicians since they receive less information than the statistical models are provided. This is not true, and in the time between Meehl’s initial monograph and this review, plenty of studies had shown that: even when ample qualitative information was provided, statistical models tended to beat clinicians. The authors of the review addressed this directly, hypothesizing that “the differential accuracy between clinical and statistical prediction would not be affected by the amount of informational cues.”

They were right! Increasing the information available to clinicians didn’t help them; in fact, it did the opposite: more information significantly reduced their judgment quality.

The problem of extra information harming predictions is not confined to the psychiatric profession. When parole boards employ subjective judgments about recidivism risk, they underperform simple actuarial judgments. Medical students who were initially rejected for subjective reasons performed as well as their peers who didn’t receive a subjective rejection. In a well-known example, academic achievement correlated almost 30% more strongly with a combination of high school rank and aptitude test scores than it did with a combination of high school rank, aptitude test scores, and counselors’ intuitive judgments about students.6

The human failure to engage in holistic judgments efficaciously also shows up experimentally.

In one set of experiments I quite like, Kausel, Culbertson and Madrid (KCM) ran a series of three studies that made the issues with holistic judgment abundantly clear. In the first study, they showed information about individual airline ticketing agent hires to 132 people who were responsible for hiring decisions. The 132 people were presented with information about each hire’s general mental ability (GMA, i.e., IQ/intelligence), conscientiousness, and the rating received in an unstructured interview. The interview ratings were on a 1-to-5 scale, but ratings in the sample only ranged from 2 to 5 because applicants who earned a 1 were not hired.

In both of this first study's conditions, the decision makers had to use the provided information to figure out which employees out of pairs of presented employees would be more likely to receive the higher performance rating from their supervisor. The first condition provided decision makers with the GMA and conscientiousness information, and the second condition tacked on the rating from the unstructured interview.

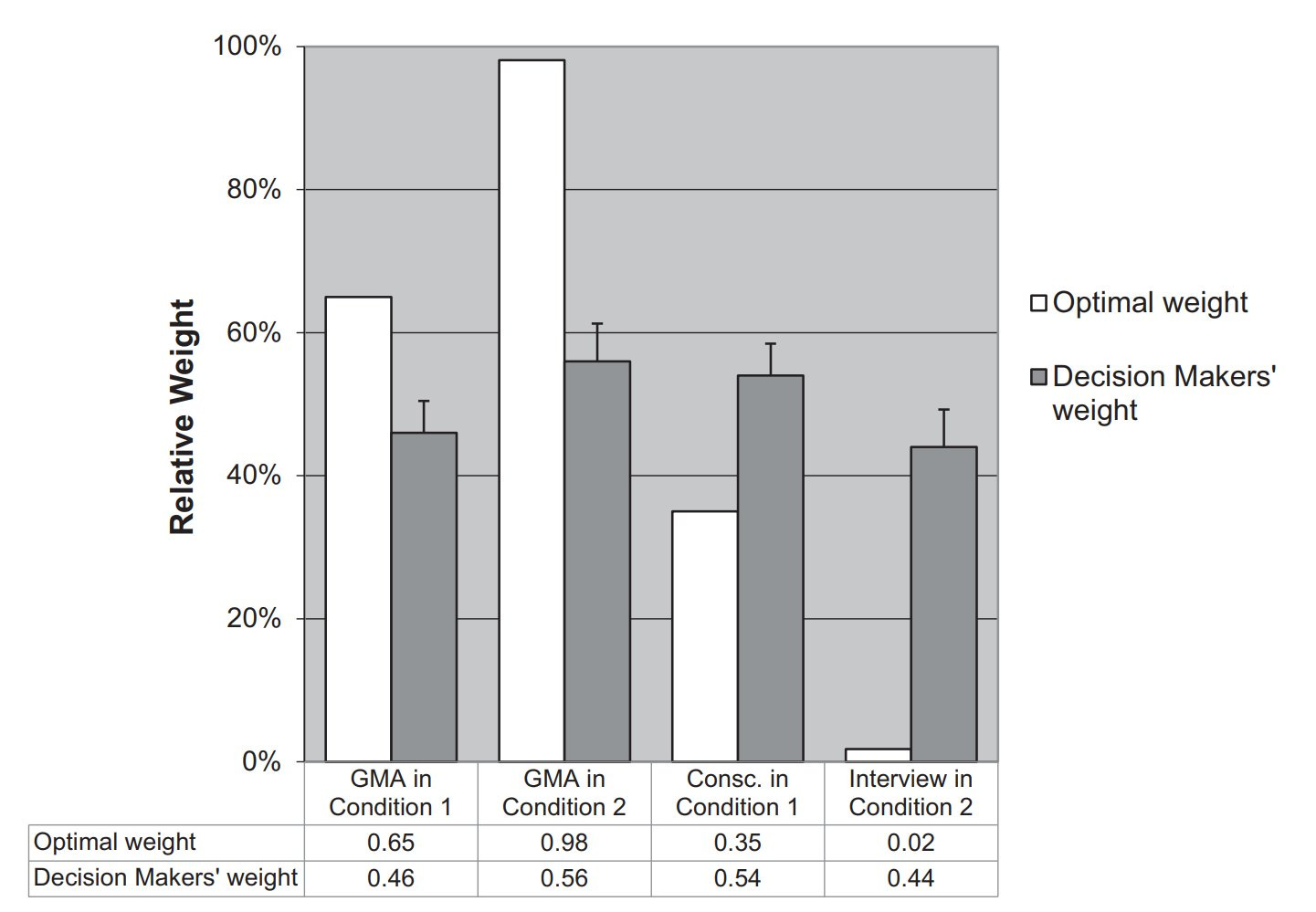

Decision makers did not handle this well. They gave a lot of weight to the results of the unstructured interviews:

The predictor that should have received the greatest weight for predicting candidate success was GMA, and it never received more than a bit over a quarter of the weight. Decision makers instead relied far too much on conscientiousness in both conditions. And in the condition where the unstructured interview rating was available, they took some weight away from GMA and conscientiousness and gave it to the interview, which was, in reality, worth practically nothing.

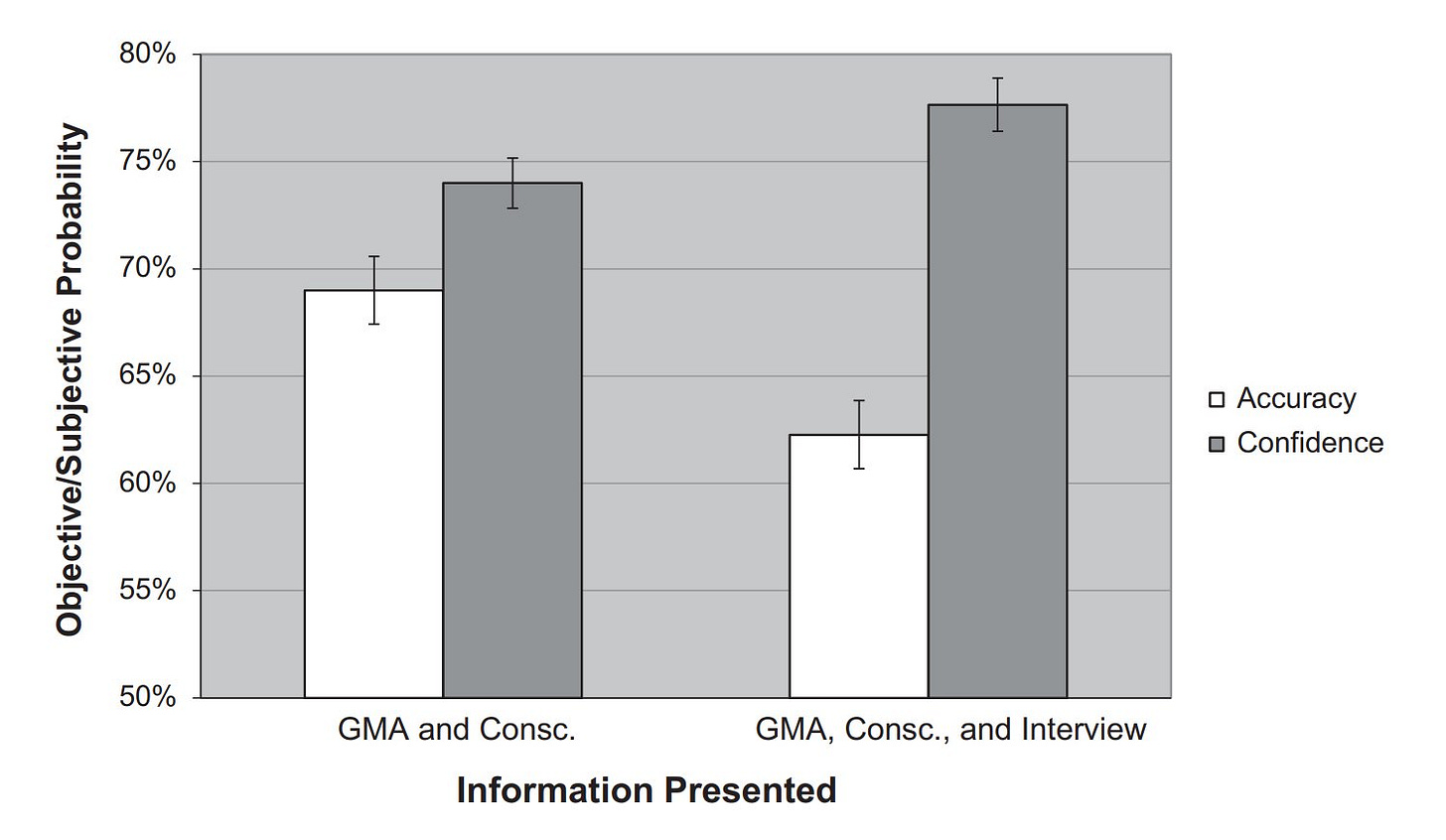

With the interview in hand, decision makers were more confident about their ability to make the right choice. At the same time, they became worse at making choices because they over-weighted the less useful interview information. Confidence up, accuracy down.
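To see how that can happen mechanically, here is a minimal simulation sketch. Everything in it is an assumption made for illustration rather than KCM’s data or method: the validity coefficients (0.5 for GMA, 0.3 for conscientiousness, zero for the interview), the three sets of weights, and the function name `simulate_pair_accuracy`. It scores pairs of simulated hires with a weighted sum and counts how often the higher score belongs to the better performer.

```python
import random


def simulate_pair_accuracy(w_gma, w_consc, w_interview, n_pairs=50_000, seed=0):
    """Share of simulated pairs where the weighted score picks the better performer."""
    rng = random.Random(seed)  # same seed -> same simulated hires for every weighting

    def candidate():
        gma = rng.gauss(0, 1)
        consc = rng.gauss(0, 1)
        interview = rng.gauss(0, 1)  # assumed to carry no signal at all
        # Assumed data-generating process (illustrative, not estimated from KCM).
        performance = 0.5 * gma + 0.3 * consc + rng.gauss(0, 1)
        score = w_gma * gma + w_consc * consc + w_interview * interview
        return score, performance

    correct = 0
    for _ in range(n_pairs):
        (s1, p1), (s2, p2) = candidate(), candidate()
        if (s1 > s2) == (p1 > p2):  # did the score rank the pair correctly?
            correct += 1
    return correct / n_pairs


# Weights that match the assumed process, with no weight on the interview.
matched = simulate_pair_accuracy(0.5, 0.3, 0.0)
# Hypothetical "holistic" weights: GMA under-weighted, conscientiousness over-weighted.
no_interview = simulate_pair_accuracy(0.25, 0.40, 0.0)
# Same judge, now also leaning on the uninformative interview rating.
with_interview = simulate_pair_accuracy(0.25, 0.40, 0.35)

print(f"matched weights:      {matched:.3f}")
print(f"misweighted:          {no_interview:.3f}")
print(f"misweighted + interview: {with_interview:.3f}")
```

Under these assumptions, the run that shifts weight onto the noise predictor comes out least accurate, which is the pattern described above. The exact numbers depend entirely on the assumed coefficients, so they should not be read as estimates of anything in the study.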

The second study used a similar design. The difference was that, in the first condition, decision makers had access to GMA and conscientiousness, whereas in the second condition, they lost access to conscientiousness and had the interview rating to work with instead. They gave a bit more weight to intelligence this time around, but they still gave far too much to the interview.

The useful part about this seemingly more constrained design is that it allows evaluating whether decision makers place more value on personality information or on the personalities they think they learn about from interviews. At this point, you might not be surprised to learn that decision makers value the unstructured interview more than the personality measure despite the interview being practically worthless.

In the third study, undergraduate students were offered the ability to compete in predicting performance using the data from the first study. Whoever did the best would get a cash prize. Unfortunately for those students exposed to the interview, the results of the previous studies held up: interviews, once again, made people more confident and less accurate, even when money was on the line.

This study is not without precedent or substantially similar replications. It’s part of a larger literature that tends to support it. Throughout this literature, there are examples aplenty of people being warned about qualitative information—like being told that interview responses are random—but still preferring to have that information rather than to drop it. People use this bad or mediocre information and end up making worse predictions as a result, but for various reasons, they are just biased towards evaluations that include it.7

Unfortunately, expertise doesn’t seem to eliminate this bias either. In Kuncel et al.’s meta-analysis of the literature on mechanical (i.e., statistical) prediction of job and academic performance, they noted:

There was consistent and substantial loss of [predictive] validity when data were combined holistically—even by experts who are knowledgeable about the jobs and organizations in question—across multiple criteria in work and academic settings.

And later:

This finding is particularly striking because in the studies included, experts were familiar with the job and organization in question and had access to extensive information about applicants. Further, in many cases, the expert had access to more information about the applicant than was included in the mechanical combination. Yet, the lower predictive validity of clinical combination can result in a 25% reduction of correct hiring decisions across base rates for a moderately selective hiring scenario. That is, the contribution our selection systems make to the organization in increasing the rate of acceptable hires is reduced by a quarter when holistic data combination methods are used. Yet, this is an underestimate because we were unable to correct for measurement error in criteria or range restriction. Corrections for these artifacts would only serve to increase the magnitude of the difference between the methods.

Machines Do Holistic Judgments Well

Humans do not accurately weight different pieces of information in their heads to obtain optimal predictions, but machines can. The website interviewing.io provided a simple example of just that. In their example, they compared recruiters’ predictions of job candidate performance to the predictions of a random forest model and an XGBoost model. With the information available to them, recruiters performed worse than both models did out of sample, and indeed, not much better than flipping a coin. The “holistic” judgments from XGBoost, on the other hand, were great.

This is not an unusual finding. Where humans lack the ability to parse the meaning of lots of different pieces of information together, a model trained to do that can use that information to produce highly accurate predictions. This is true in many areas. For example, it’s possible to predict the development of various cancers and clinical progression from information contained in the electronic health records of medical patients, leveraging information that doctors often won’t even know is related to the conditions models can predict.8

People Are Accountable For Machines

If you must use a holistic process for selecting job candidates, university admits, people to prioritize for treatment or care, or anything else, try to use a real model instead. It’ll very likely work better than the biased judgments of humans, and it will probably come close to being truly holistic rather than simply useless or instrumental towards an illicit goal, like discriminating in university admissions. The other big benefit is that a model can be audited, whereas auditing human judgment for bias or error is often impractical.

As a policy suggestion, imagine if universities were required to publish their admissions formulas, as the University of Austin just did. Formulas could be audited for fairness, and universities could then be compelled to supply the data required to assess their compliance with the formulas they chose to use. This would eliminate the known biases in holistic admissions (see SFFA v. Harvard) and allow universities to be held accountable by preventing them from lying.
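As a rough sketch of what such an audit could look like in code: the formula, weights, cutoff, field names, and records below are all hypothetical and are not the University of Austin’s or any other school’s actual criteria. The idea is only that a published formula lets an outsider recompute each decision and flag the ones that don’t match.

```python
from dataclasses import dataclass

# Hypothetical published formula: these weights and this cutoff are made up.
WEIGHTS = {"test_score": 0.6, "class_rank": 0.4}
CUTOFF = 0.70


@dataclass
class Applicant:
    applicant_id: str
    test_score: float  # standardized test result, rescaled to 0-1
    class_rank: float  # class-rank percentile, rescaled to 0-1
    admitted: bool     # the decision the school actually reported


def formula_score(a: Applicant) -> float:
    """Score an applicant with the published weights."""
    return WEIGHTS["test_score"] * a.test_score + WEIGHTS["class_rank"] * a.class_rank


def audit(applicants: list[Applicant]) -> list[str]:
    """Return the IDs whose reported decision disagrees with the published formula."""
    return [
        a.applicant_id
        for a in applicants
        if (formula_score(a) >= CUTOFF) != a.admitted
    ]


# Invented records for illustration.
records = [
    Applicant("A", test_score=0.9, class_rank=0.8, admitted=True),   # consistent
    Applicant("B", test_score=0.5, class_rank=0.6, admitted=True),   # admitted below cutoff
    Applicant("C", test_score=0.8, class_rank=0.9, admitted=False),  # rejected above cutoff
]

print(audit(records))  # flags B and C
```

Nothing comparable is available for a holistic process, because there is no stated rule to recompute the decisions against.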

One can dream.

Which I consider his magnum opus. This means a lot given how much excellent work he put out over his long career.

This review was the fourth major accomplishment of Meehl in his book. It was also the locus of the only flat-out error in the book that is known to me. In discussing Hovey and Stauffacher’s (1953) study of clinical versus actuarial MMPI interpretation, Paul did not detect a mistake (pointed out by McNemar, 1955) that the original authors made in computing the degrees of freedom on a test.

See also, this commentary by Goldberg.

Quote:

Some critics asked a question… which I confess I am totally unable to understand: Why would [my colleague and I] be fomenting this needless controversy? Let me state as loudly and as clearly as I can manage, even if it distresses people who fear disagreement, that [my colleague and I] did not artificially concoct a controversy or foment a needless fracas between two methods that complement each other and work together harmoniously. I think this is a ridiculous position when the context is the pragmatic context of decision making. You have two quite different procedures for combining a finite set of information to arrive at a predictive decision. It is obvious from the armchair… that the results of applying these two different techniques to the same data set do not always agree. On the contrary, they disagree a sizable fraction of the time… The plain fact is that [decision makers] cannot act in accordance with both of [two] incompatible predictions. Nobody disputes that it is possible to improve clinicians’ practices by informing them of their track records actuarially. Nobody has ever disputed that the actuary would be well advised to listen to clinicians in setting up the set of variables.

Though not directly related, I will note something interesting: humans do not mentally do regressions using OLS, they do something more akin to a Deming Regression.
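For readers who haven’t met the distinction, here is a minimal sketch of the two estimators, not of how anyone’s head actually works. OLS assumes all the noise is in y; Deming regression allows noise in both x and y, with `delta` being the assumed ratio of the y-error variance to the x-error variance (`delta=1.0` gives orthogonal regression). The simulated data, the true slope of 2, and the noise levels are my own illustrative choices.

```python
import math
import random


def _moments(xs, ys):
    """Sample variances of x and y and their covariance (divided by n)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs) / n
    syy = sum((y - my) ** 2 for y in ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    return sxx, syy, sxy


def ols_slope(xs, ys):
    """Ordinary least squares slope of y on x."""
    sxx, _, sxy = _moments(xs, ys)
    return sxy / sxx


def deming_slope(xs, ys, delta=1.0):
    """Deming regression slope; delta = var(y errors) / var(x errors)."""
    sxx, syy, sxy = _moments(xs, ys)
    d = syy - delta * sxx
    return (d + math.sqrt(d * d + 4 * delta * sxy * sxy)) / (2 * sxy)


# Simulated data: true slope 2, equal measurement noise added to both x and y.
rng = random.Random(0)
latent = [rng.gauss(0, 1) for _ in range(5000)]
xs = [t + rng.gauss(0, 0.5) for t in latent]
ys = [2 * t + rng.gauss(0, 0.5) for t in latent]

ols = ols_slope(xs, ys)
deming = deming_slope(xs, ys)
print(f"OLS slope:    {ols:.2f}")     # pulled toward zero by the noise in x
print(f"Deming slope: {deming:.2f}")  # close to the true slope of 2
```

With noise in x, the OLS slope is attenuated while the Deming slope stays near the true value, so the two methods describe the same scatter with noticeably different lines.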

In a book chapter Meehl wrote seven years after his three-decade review of the subject, he noted that his conclusions applied to predictions as diverse as business bankruptcy, longevity, military training success, myocardial infarction, neuropsychological diagnosis, parole violation, police termination, psychiatric diagnosis, violence, and more, a point others have since agreed with.

For some additional quotations and details that I found enlightening from this study and some other, related works, see:

For another finding related to this that I found interesting, see this, which showed that first impressions about job candidates aren’t worth much, but they’re substantially correlated with interviewer judgments following longer-form interviews.

Tangentially related to people being bad at using this sort of information: people are bad at describing changes in their own knowledge.

The flip side of clinical prediction getting good is that there are lots of people today who are obsessed with getting as much medical information about themselves as they can, with no intention of feeding it into these models. The direct meanings of every little biomarker might be worth something, but for almost everyone, they won’t be worth much at all for the simple reason that the deficiencies that haven’t turned pathological (i.e., lack of iodine → goiter; lack of vitamin D → rickets, etc.) usually do almost nothing to people.

Goes along with the huge lit on how people are bad at probabilities and normative reasoning generally, make conjunction fallacies etc.

I think that's interesting, but only half the picture: it just means that how we do reason is different from normative accounts in important ways and has different priorities (more pragmatic, social, contextual, anchored to available info, etc).

Task then becomes to work out what human judgements are for, when human judgements can shine best, etc, and how to use human and model best together

For whatever reason, Government is ferociously hostile to evaluating people accurately -- i.e., with objective data and actuarial analysis.

"California ‘No Robo Bosses Act’ would bar AI from making personnel decisions

New legislation in California would prohibit employers from using automated decision-making systems in personnel management tasks." https://statescoop.com/california-no-robo-bosses-act-ai-personnel-decisions-2025/#:~:text=California%20state%20Sen.,termination%20decisions%20without%20human%20oversight.