Eliminating Distractions in Longevity Research

Longevity maximizers should invest in biotechnology, not modifiable lifestyle factors

This post is brought to you by my sponsor, Warp.

This is the sixth in a series of timed posts. The way these work is that if it takes me more than one hour to complete the post, an applet that I made deletes everything I’ve written so far and I abandon the post. Check out previous examples here, here, here, here, and here. I placed the advertisement before I started my writing timer.

How Could We Be So Blind?

VitaDAO is a longevity-focused Decentralized Autonomous Organization that has just funded a study by Pabis et al. that left me stunned. One of the study’s results might overturn 89 years of longevity research, and all it took was checking a meta-analytic moderator.

The research that’s come into question is on the benefits of caloric restriction for lifespan. The idea behind this research is that eating just enough to live supports a longer life. The potential benefit has been thought to be enormous, perhaps on the order of a 30% or 40% boost to longevity. That is definitely an attractive idea, and one worth pursuing if your goal is to live a long life; according to a 2010 review, caloric restriction is “the only nongenetic method that extends lifespan in every species studied, including yeast, worms, flies, and rodents.”

Despite there being hundreds of papers on the longevity benefits of caloric restriction, it seems no one bothered to empirically assess if the benefits to the longevity of a given model species were specific to its relatively short- or long-lived strains. In theory, the lifespan extension benefits could be positively, negatively, or not at all correlated with the lifespans of control animals, and if the correlations are positive or negative, that could have serious theoretical implications for the value of caloric restriction.

Pabis et al.’s study looked at the treatment effect of caloric restriction on lifespan for mice, flies, worms, and yeast, and found that, in yeast, there was no benefit, and across the other three species, caloric restriction only had benefits outside of the longest-lived strains. In fact, in the top decile of strains by lifespan, there didn’t seem to be any longevity benefit to caloric restriction. Across species, there were substantial negative correlations between control lifespans and the benefits of caloric restriction.
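Checking a meta-analytic moderator like this amounts to regressing each strain's treatment effect on its control lifespan, weighting each estimate by its precision. The sketch below is a plain fixed-effect meta-regression, assuming each study reports a log ratio of treated to control median lifespan plus a standard error. The function name and the toy numbers are hypothetical and are not Pabis et al.'s data or necessarily their exact model.

```python
import numpy as np

def weighted_moderator_slope(effects, ses, moderator):
    """Fixed-effect meta-regression: effect = b0 + b1 * moderator, weights = 1/SE^2.

    Returns (b0, b1, se_b1). A negative b1 means the treatment effect shrinks
    as the moderator (here, control lifespan) grows.
    """
    y = np.asarray(effects, dtype=float)
    w = 1.0 / np.asarray(ses, dtype=float) ** 2
    X = np.column_stack([np.ones_like(y), np.asarray(moderator, dtype=float)])
    # Weighted normal equations: (X'WX) b = X'Wy
    xtwx = X.T @ (w[:, None] * X)
    xtwy = X.T @ (w * y)
    beta = np.linalg.solve(xtwx, xtwy)
    cov = np.linalg.inv(xtwx)  # fixed-effect covariance of the coefficients
    return beta[0], beta[1], np.sqrt(cov[1, 1])

# Hypothetical toy inputs (NOT real data): log(treated/control median lifespan),
# their standard errors, and control median lifespans in days.
effects = [0.35, 0.25, 0.15, 0.05, 0.00]
ses = [0.05, 0.05, 0.05, 0.05, 0.05]
control_days = [500, 600, 700, 800, 900]

b0, b1, se_b1 = weighted_moderator_slope(effects, ses, control_days)
print(f"slope per 100 control days: {100 * b1:.3f} (SE {100 * se_b1:.3f})")
```

A real analysis would likely add a between-study variance term (a random-effects model), but the sign of the slope is the quantity that carries the "catching-up" interpretation.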

Caloric restriction clearly has far from universal lifespan benefits in several common model organisms. Instead, what we see when it seems to work is something more akin to ‘catching-up’ among the shortest-lived and likely the most metabolically dysfunctional strains of different animals. This idea is not unprecedented, and several papers have suggested there’s reason to be concerned about the suitability of controls for study. For example, Martin et al. said that some control rodents are “metabolically morbid”, with the possible implication that treatments that help them might not help and may even hurt healthy subjects. Liao et al. found that different mouse strains had lifespans that varied two-to-threefold with free feeding and a stunning six-to-tenfold under caloric restriction. de Magalhães et al. found control lifespans varied considerably and suggested this could bias the estimation of treatment effects. Swindell presented mouse evidence for genotype-dependent effects of caloric restriction that could be so impactful that caloric restriction might just not benefit some strains at all. There are several studies like these, but, to my knowledge, none of them checked the moderator Pabis et al. did.

Once we acknowledge strain-specific effects, it becomes appropriate to ask: How much longevity research has been a waste? How many treatment effects have been about normalizing rather than extending maximum lifespans? Any reasonable answer has to concede that there’s been a lot of misplaced effort that seems unlikely to translate into human benefit.

Why Have Human Lifespans Grown?

Globally, people today live longer than ever before due to mortality improvements at all ages. The largest gains have been to child mortality, whereas at the top end, the gains have been much smaller. In effect, survival curves look increasingly rectangularized, like so:

While average lifespans have greatly increased, maximum lifespans have moved much less, because mortality still rises exponentially with age. The increase in average lifespan thus primarily represents catching-up, much like what Pabis et al. saw when caloric restriction failed to extend the lifespans of the longest-lived animals while robustly extending those of short-lived strains.
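The rectangularization argument can be illustrated with a Gompertz-Makeham hazard, μ(x) = λ + α·e^(βx), where λ is age-independent background mortality and the exponential term stands in for aging. This is a sketch with made-up round parameter values, not fitted human data: it compares a high and a low background mortality while holding the aging term fixed, and reports both the mean age at death and the first age at which survival falls below 1 in 10,000 (a crude proxy for maximum lifespan).

```python
import numpy as np

def gompertz_makeham_survival(ages, background, alpha, beta):
    """Survival S(x) = exp(-background*x - (alpha/beta)*(exp(beta*x) - 1))."""
    cumulative_hazard = background * ages + (alpha / beta) * np.expm1(beta * ages)
    return np.exp(-cumulative_hazard)

def summarize(background, alpha=1e-4, beta=0.085, tail=1e-4, step=0.01):
    """Return (mean age at death, first age at which survival falls below `tail`)."""
    ages = np.arange(0.0, 150.0, step)
    s = gompertz_makeham_survival(ages, background, alpha, beta)
    # Mean age at death is the area under the survival curve (trapezoid rule).
    mean_age = step * (s.sum() - 0.5 * (s[0] + s[-1]))
    # Crude stand-in for "maximum lifespan": where survival drops below `tail`.
    extreme_age = ages[np.argmax(s < tail)]
    return mean_age, extreme_age

# Hypothetical parameters: high vs. low age-independent (background) mortality,
# with the same exponential aging term in both scenarios.
mean_hi, extreme_hi = summarize(background=0.01)
mean_lo, extreme_lo = summarize(background=0.0005)
mean_gain = mean_lo - mean_hi
tail_gain = extreme_lo - extreme_hi
print(f"mean age at death: {mean_hi:.1f} -> {mean_lo:.1f} (+{mean_gain:.1f} years)")
print(f"age where survival < 1e-4: {extreme_hi:.1f} -> {extreme_lo:.1f} (+{tail_gain:.1f} years)")
```

With these assumed parameters, cutting background mortality adds well over a decade to the mean but only a year or two to the extreme tail, because the exponential term dominates at old ages no matter what happens earlier in life.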

Are You a Long-Lived Strain?

I suspect that belonging to a long-lived strain, like the unaffected top decile of animal model strains, depends on both genetics and environment. If a genetically long-lived strain were placed in a suboptimal environment, it seems likely that its members wouldn’t live as long. Several of the treatment effects shown in Pabis et al.’s study appear to support that.

The operative question for humans interested in interventions that might promote longer life is whether the environmental improvements that have promoted survival curve rectangularization have made us a long-lived strain, or whether we’re still so genetically—and perhaps environmentally—deficient that there’s something to be gained from caloric restriction and other low-tech interventions. We don’t have human data demonstrating survival improvements from caloric restriction yet, but we do have data from rhesus monkeys. Luckily, we know the results of those rhesus monkey studies aren’t impacted by typical control strain lifespans because there’s not a lot of monkey husbandry going on.

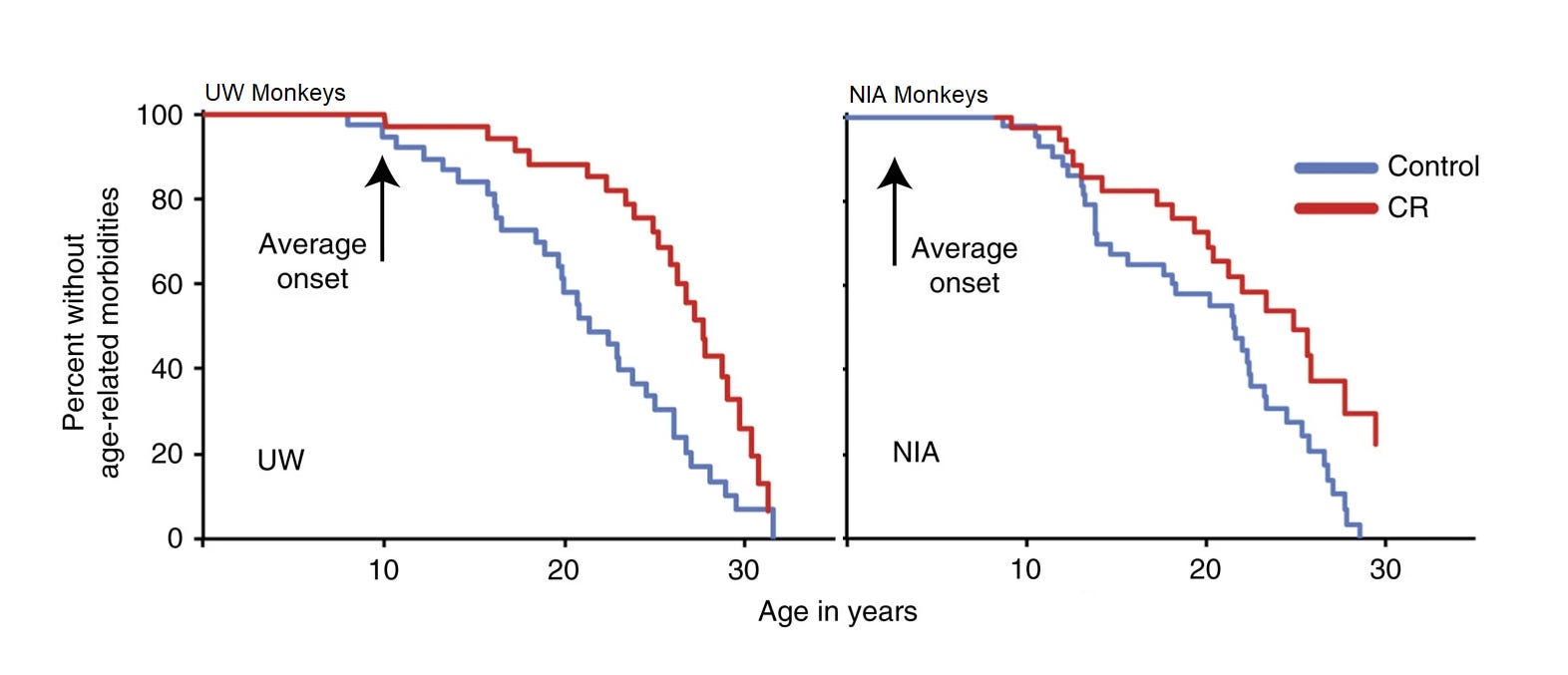

There are currently results from two studies, one from the University of Wisconsin (UW) and another from the National Institute on Aging (NIA). The evidence from these studies seemed to be in disagreement when their respective initial publications came out, and overall, the results do not convincingly support survival benefits from caloric restriction. A summary of both results was provided in a 2017 publication that did provide evidence supporting lower age-related morbidity rates for calorically restricted (CR) monkeys:

It seems logical that lower age-related morbidity would result in longer survival, but this can’t be assumed even if it seems likely. It might show up at very large sample sizes, but these studies had only modest ones. The UW study had 23 male and 15 female control-CR pairs. The NIA study had 10 pairs each of old and juvenile males, plus 12 control and 10 CR adolescent males; among females, it had 9 juvenile pairs, 15 control and 11 CR adults, and, among the old, 8 controls and 7 CR monkeys.

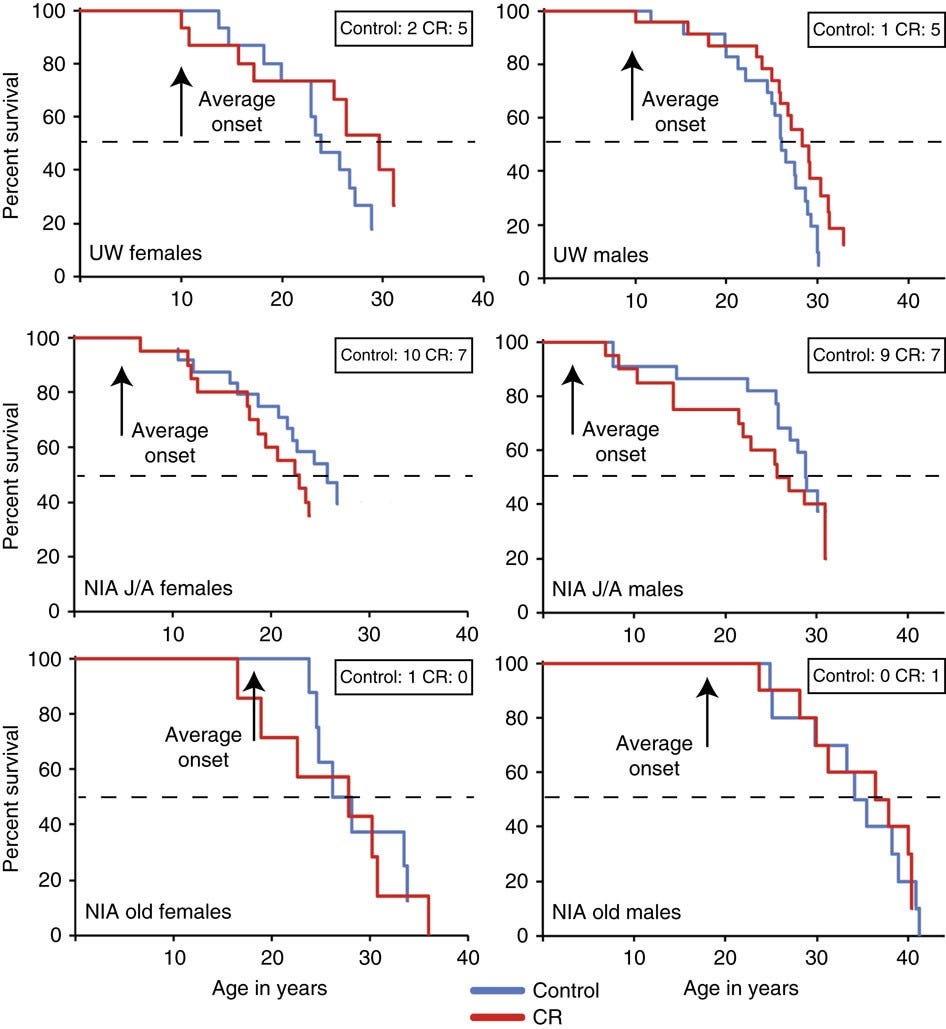

The reason for the age split in the NIA study is theoretical: maybe the age that caloric restriction starts matters for the achieved benefits. Who’s to say a priori? Let’s look:

In the UW study, the age of caloric restriction initiation was moderate, situated between the initiation ages of the NIA cohorts. In the UW study, caloric restriction seemed related to substantial life extension, but the p-values split by sex were 0.052 for males and 0.179 for females.1 The middle set of results is for an NIA cohort with a young age of caloric restriction initiation. In it, controls actually seemed to be doing better than CR monkeys, but again nonsignificantly, with male and female p-values of 0.351 and 0.511, respectively. The bottom set of results is for a cohort that was already older when caloric restriction was started, and in that case there’s simply no evidence for anything, a conclusion supported by extremely unimpressive p-values of 0.687 and 0.489.2 To get a significant result from any of these, you have to combine the male and female UW samples, which yields a p-value of 0.017.3 That is still incredibly unconvincing, especially given the seemingly large size of the effect (HR = 1.865).
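When only a hazard ratio and its 95% CI are reported, an approximate p-value can be backed out by assuming the interval is a Wald interval built symmetrically on the log scale. The helper below is a hypothetical sketch of that conversion, applied to the sex-combined UW values from Mattison et al. 2017 (HR = 1.865, 95% CI 1.119 to 3.108). Published p-values may come from a likelihood-ratio or score test instead, so small discrepancies are expected.

```python
import math
from scipy.stats import norm

def p_from_hr_ci(hr, ci_lower, ci_upper, level=0.95):
    """Approximate two-sided p-value for a hazard ratio from its reported CI.

    Assumes a Wald interval on the log scale:
    SE(log HR) = (log upper - log lower) / (2 * z_crit).
    """
    z_crit = norm.ppf(1 - (1 - level) / 2)
    se_log = (math.log(ci_upper) - math.log(ci_lower)) / (2 * z_crit)
    z = math.log(hr) / se_log
    return 2 * norm.sf(abs(z))

# Sex-combined UW estimate: HR = 1.865, 95% CI 1.119 to 3.108
print(round(p_from_hr_ci(1.865, 1.119, 3.108), 3))  # prints: 0.017
```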

If you inverse-variance weight the sex-combined log HRs from the Cox proportional hazards models, you get a pooled HR of 1.162 with a 95% confidence interval from 0.838 to 1.610. That means that, with the data as they are, caloric restriction was nonsignificantly (p = 0.368) related to longer survival.

```python
import numpy as np
from scipy.stats import norm

Z_975 = norm.ppf(0.975)  # about 1.96, for two-sided 95% intervals

def se_from_ci(log_ci_lower, log_ci_upper):
    """Standard error of a log HR recovered from its 95% CI bounds (already logged)."""
    return (log_ci_upper - log_ci_lower) / (2 * Z_975)

# Data from Mattison et al. 2017, Supplementary Table 1 (UW, NIA young-onset, NIA old-onset).
# Everything is pooled on the log scale and exponentiated at the end.
log_hrs = np.log([1.865, 0.743, 1.017])
log_ci_lowers = np.log([1.119, 0.433, 0.511])
log_ci_uppers = np.log([3.108, 1.275, 2.022])

# SEs of the log HRs
se_values = se_from_ci(log_ci_lowers, log_ci_uppers)

# Inverse-variance weights
weights = 1 / se_values**2

# Pooled log HR and its SE
pooled_log_hr = np.sum(weights * log_hrs) / np.sum(weights)
pooled_se = np.sqrt(1 / np.sum(weights))

# 95% CI on the log scale
ci_lower_log = pooled_log_hr - Z_975 * pooled_se
ci_upper_log = pooled_log_hr + Z_975 * pooled_se

# z-test against the null log HR of 0 (HR = 1), then the two-sided p-value
z_value = pooled_log_hr / pooled_se
p_value = 2 * norm.sf(abs(z_value))

# Exponentiate back to the HR scale
pooled_hr, ci_lower, ci_upper = np.exp([pooled_log_hr, ci_lower_log, ci_upper_log])
print(f"HR = {pooled_hr:.3f}, 95% CI {ci_lower:.3f} to {ci_upper:.3f}, p = {p_value:.3f}")
# prints: HR = 1.162, 95% CI 0.838 to 1.610, p = 0.368
```

The evidence from rhesus monkeys can be contorted in a variety of ways to make caloric restriction look like it works or like it doesn’t, but very little can be convincingly demonstrated with these data because there’s just not enough power, so I’ll stick with this meta-analytic HR. To put the low power in perspective, it’s so poor that even the statistical interactions by age of initiation aren’t significant, which further justifies using the meta-analytic HR.
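One way to see how little power these cohorts have is Schoenfeld's approximation for the number of deaths a two-arm Cox or log-rank comparison needs to detect a given hazard ratio. This sketch assumes 1:1 allocation, a two-sided α of 0.05, and 80% power; the function name is hypothetical, and plugging in the HRs above is purely illustrative.

```python
import math
from scipy.stats import norm

def schoenfeld_required_deaths(hr, alpha=0.05, power=0.80, allocation=0.5):
    """Schoenfeld's approximation: deaths (events) needed to detect hazard ratio `hr`
    in a two-arm Cox/log-rank comparison.

    allocation: fraction of animals in one arm (0.5 means 1:1 randomization).
    """
    z_alpha = norm.ppf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = norm.ppf(power)           # quantile for the desired power
    log_hr = math.log(hr)
    return (z_alpha + z_beta) ** 2 / (allocation * (1 - allocation) * log_hr ** 2)

for hr in (1.162, 1.5, 1.865):
    deaths = math.ceil(schoenfeld_required_deaths(hr))
    print(f"HR = {hr}: roughly {deaths} deaths needed for 80% power")
```

For an effect the size of the pooled HR of 1.162, the formula asks for well over a thousand deaths. Even an effect as large as the UW point estimate needs about 81, which is more than the 76 animals in the 38 UW pairs.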

Given the small sample sizes of these studies, I think we can toss the idea that the effect of caloric restriction on lifespan, if there is one, is self-evidently huge in primates. We can also tentatively suggest that there’s really no good evidence that primates, let alone the longest-lived primates, humans, realize lifespan-extending benefits from caloric restriction. I’m going to hazard the following suggestion: humans in developed nations and other primates in good conditions will be like the top decile of long-lived model animal strains in Pabis et al.’s analysis, failing to achieve longer lives with caloric restriction outside of the most obese and at-risk specimens.

So what does work for extending maximum lifespan, and how could we know?

A Message From My Sponsor

Steve Jobs is quoted as saying, “Design is not just what it looks like and feels like. Design is how it works.” Few B2B SaaS companies take this as seriously as Warp, the payroll and compliance platform used by based founders and startups.

Warp has cut out all the features you don’t need (looking at you, Gusto’s “e-Welcome” card for new employees) and has automated the ones you do: federal, state, and local tax compliance, global contractor payments, and 5-minute onboarding.

In other words, Warp saves you enough time that you’ll stop having the intrusive thoughts suggesting you might actually need to make your first Human Resources hire.

Get started now at joinwarp.com/crem and get a $1,000 Amazon gift card when you run payroll for the first time.


The 900-Day Rule

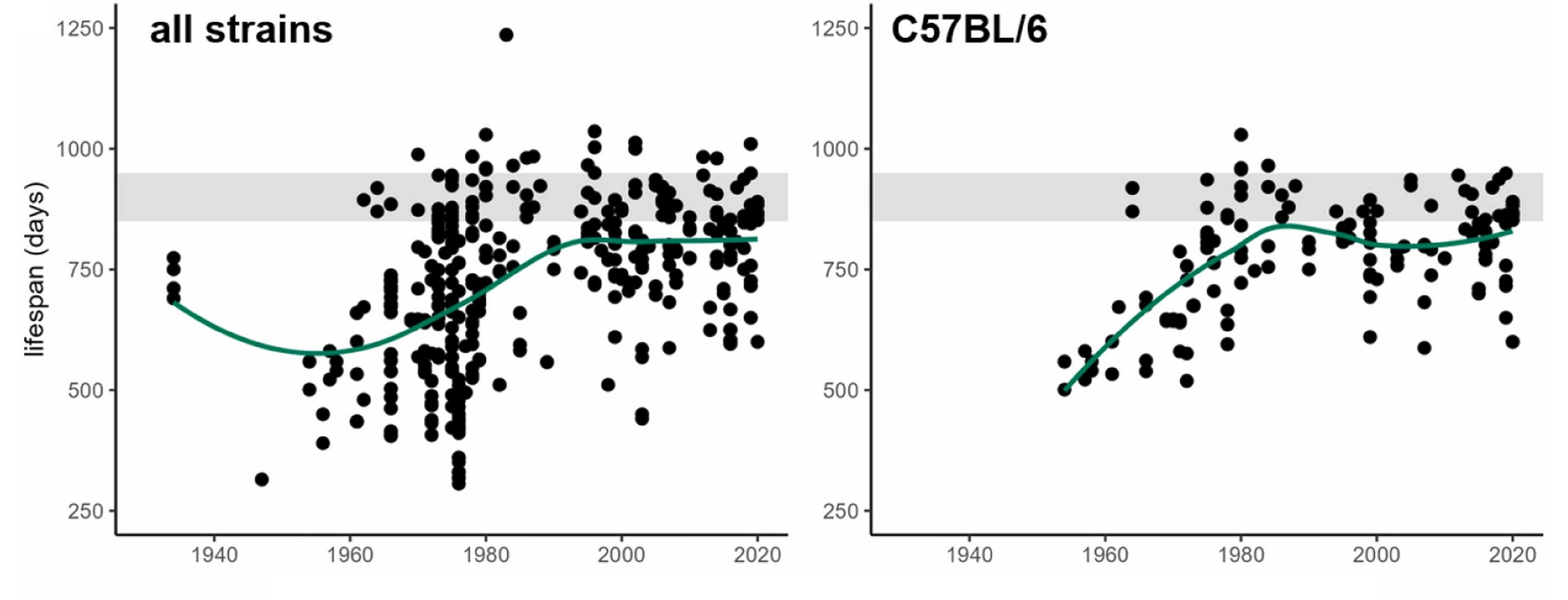

Short-lived strains are attractive because they let research move faster, but that convenience has polluted the literature: the very reasons these strains are short-lived make their results unreliable. Pabis et al. therefore argued that credibly demonstrating longevity benefits requires strains with long control lifespans under optimal conditions. Luckily, in the late 1970s and early 1980s, improved animal husbandry and environmental quality raised the lifespans of a number of mouse strains to a plateau of around 800 days.

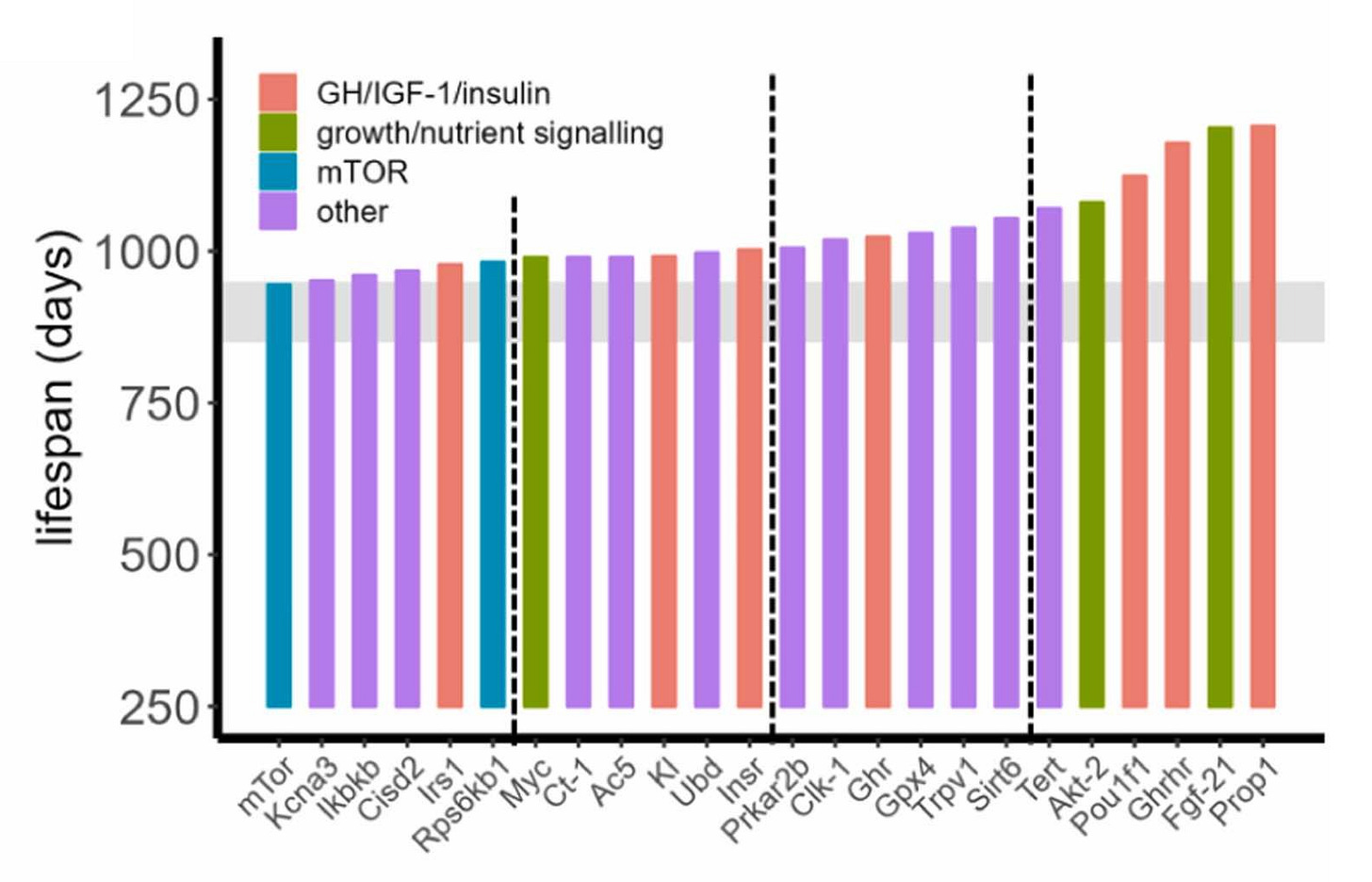

The shaded area in this diagram corresponds to lifespans between 850 and 950 days, and it reflects Pabis et al.’s suggested method for validating longevity treatments in mouse models: a 900-day rule. Under this rule, whose day count should be adjusted for the species in question, a longevity treatment “passes” if it produces a significant lifespan extension while control lifespan is 850 to 950 days or, failing that, if the treated group’s final median lifespan is at least 950 days.

This suggestion is akin to the p = 0.05 threshold researchers use in other domains. It might be gameable like that threshold, but that seems less likely because it is an objective criterion used as a cutoff. In any case, it’s not a hard-and-fast rule; it’s an empirically guided and empirically updatable suggestion that should help researchers judge whether they’re seeing a ‘significant’ improvement in lifespan with a given treatment.
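The rule's pass/fail logic is simple enough to write down. This is a hypothetical encoding of the rule as described above, not code from Pabis et al.: it assumes "control lifespan" means the control group's median, and it treats the significance test (for example, a log-rank or Cox test) as an input computed elsewhere. The `target` parameter stands in for the species-specific day count.

```python
from dataclasses import dataclass

@dataclass
class LifespanResult:
    control_median_days: float
    treated_median_days: float
    significant_extension: bool  # e.g., from a log-rank or Cox test on the cohort

def passes_day_rule(result, target=900.0, half_width=50.0):
    """Hypothetical encoding of the 900-day rule.

    Pass if (a) the control median falls in [target - half_width, target + half_width]
    and the lifespan extension is significant, or, failing that, (b) the treated
    median is at least target + half_width. Rescale `target` for other species.
    """
    low, high = target - half_width, target + half_width
    controls_in_band = low <= result.control_median_days <= high
    if controls_in_band and result.significant_extension:
        return True
    return result.treated_median_days >= high

# Illustrative, made-up cohorts:
print(passes_day_rule(LifespanResult(880, 960, True)))   # True: controls in band, significant
print(passes_day_rule(LifespanResult(650, 800, True)))   # False: short-lived controls, treated < 950
print(passes_day_rule(LifespanResult(700, 955, False)))  # True: treated median >= 950
```

The second case is the one the rule is designed to catch: a statistically significant gain in a short-lived control strain that never reaches the lifespans healthy animals already achieve.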

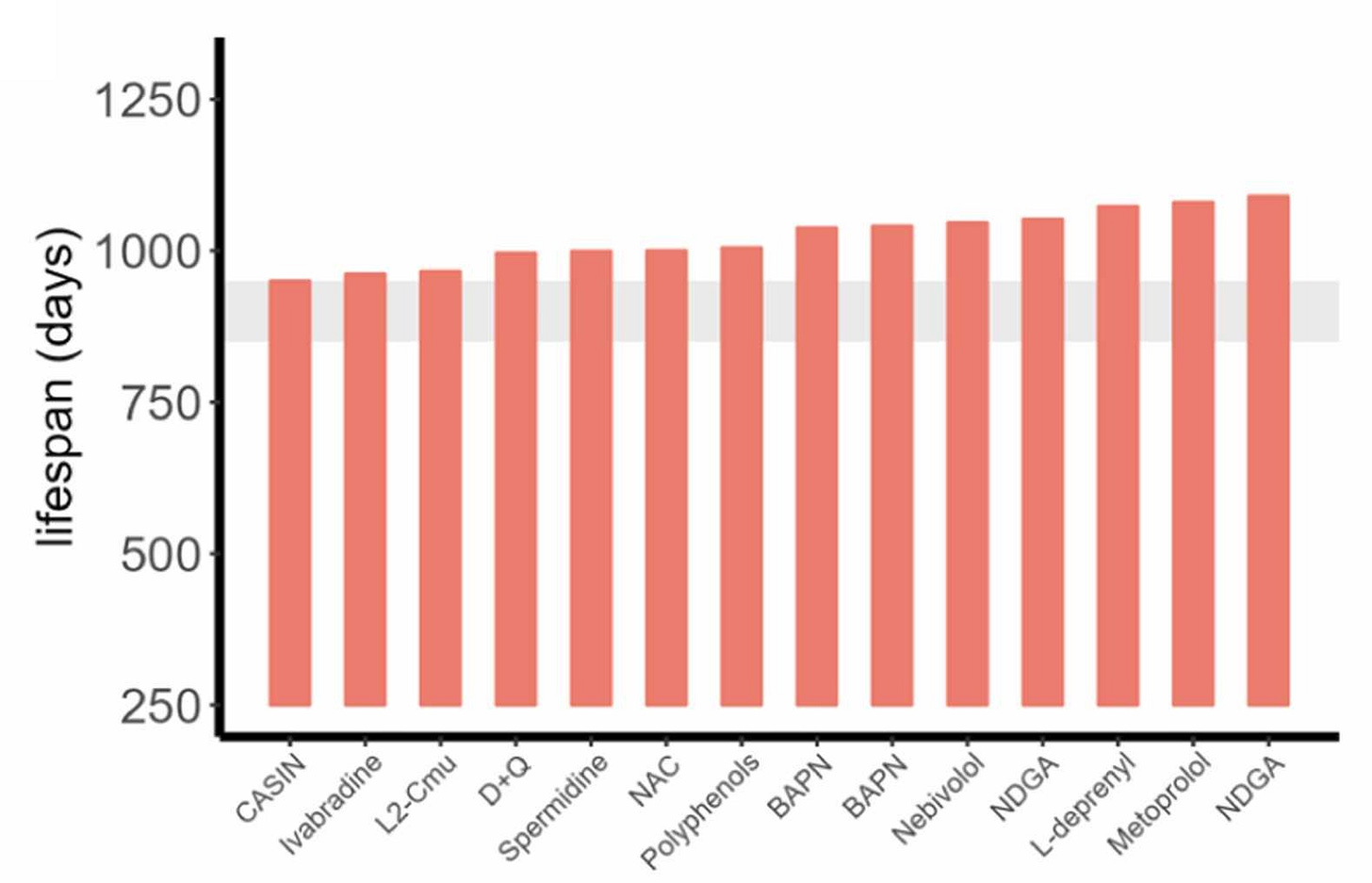

Pabis et al. demonstrated the rule in practice by using the cutoff to evaluate various longevity treatments. First, twelve different compounds do extend longevity as evaluated with the 900-day rule, across fourteen different cohorts:

Second, several genetic interventions appear to extend longevity too:4

These results are all well and neat, but this method also reveals lots that doesn’t work. For example, aspirin, several nicotinamide variants, metformin, resveratrol, and curcumin don’t seem to extend lifespans. Too bad, but at least now we know and, frankly, I think that’s really reassuring for a simple reason: If something doesn’t produce sufficiently radical life extension, at least we now have evidence that we can use to push people to go and study other options!5

Let the search commence!

Stop Sending Checks to Legends

Who’s going to do the search? I can’t say, but I do know a lot of frauds who shouldn’t be involved, and I have a suggestion for excluding other people from funding: I think you shouldn’t send money to scientific legends, the flashiest, most highly accomplished researchers.

There are a few pieces to my argument. One of them is that high-profile researchers are likely to already be old by the time they’re accomplished, and that means they’ll be individually less creative than their younger peers, all else being equal. Keep that in mind unless they’re unusually creative. If anything, consider sponsoring their graduate students, but maybe wait until they leave the lab so that they’re not under the direction of someone who’s slowed down, someone who’s more likely to be concerned with funding, speaking, and grants than with doing science.

Another argument against funding legends is that they’re too flashy—they don’t focus enough on the simple, unsexy testing that constitutes basic science, data generation, the bread and butter of true discovery. We need lab monkeys willing to eschew fame, because it’s unlikely the work that makes us live longer is going to be sexy from the outset, even if it ends up that way.

A final argument I consider very important is that fraud matters, and accomplished researchers are unfortunately likely to be frauds. They’re famous for a reason, and that all-too-often has to do with doing bad work that makes them look good. That bad work is usually just work that’s negatively impacted by questionable research practices, but often enough, it’s outright fraud. Consider the recent example of the director of the NIA’s neuroscience division, Eliezer Masliah, a fraud. Masliah is one of the world’s top Alzheimer’s and Parkinson’s researchers, and his work has inspired clinical trials aplenty, but it’s undergirded by fabrication and falsification, so much of that effort is a waste.

People who worked with Masliah might not be frauds themselves, but they’re contaminated by fraud. Their ideas? Maybe wrong! Their research efforts? Maybe wasted! Fraudsters have systemic effects, and Masliah probably had among the most pernicious of fraudster impacts because he influenced so many people through his work, his collaborations, and his control of NIA granting objectives, research direction, and funding opportunities. To put this into perspective, there are 238 active patents concerning neurological conditions that cite suspect work he was involved in.

The material damage this man has done is probably extreme because he was such a superstar in his domain. In recent years, it’s been found that there are tons of other researchers like him. Want to avoid his type? Fund people who aren’t trying to hog the limelight, who want to do basic, incremental research. This is not a sure-fire method to avoid fraudsters, but it’s a suggestion that should at least help to avoid unreliable clout chasers like Masliah and his lesser-known ilk.

What We Have To Do

Want to extend human lifespans? Fund the right research: focus on maximum lifespan extension rather than lifespan normalization. Want to live forever? Again, focus on maximum lifespan, not on improvements that fall within the range of existing human lifespan variation (like caloric restriction), and not on mistakenly targeting biomarkers instead of aging itself.

To progress, we have to eliminate causes of death, not just raise the left tail of the longevity distribution, and we have to stop focusing on interventions that work only by doing that. That means less focus on simple interventions like caloric restriction and much more effort on basic science and biotech.

Using Kaplan-Meier product limit survival analysis instead of Cox Proportional Hazard Regression, the p-values for males and females were 0.049 and 0.174, respectively.

In order, the Kaplan-Meier product limit survival analysis p-values for these results were 0.347, 0.510, 0.687, and 0.487.

0.015 with Kaplan-Meier product limit survival analysis.

Interestingly, most of the genetic interventions that extended lifespan were gene knockouts rather than transgenic mouse models.

If I had to bet, this method reduces but does not fix the problem of insufficient animal-to-human generalization of results. That’s OK. This is at least something, a starting place, and we might be able to substantially overcome the issue by leveraging similar rules in diverse species. If there’s convergent evidence for lifespan extension across many models—rats, monkeys, shellfish, gophers, chipmunks, squirrels, worms, birds, fish, whatever—under an X-day rule, then it’ll be that much more likely that we’ll finally start finding results that generalize to humans too.

It's even worse than you portray. Humans live five times as long as should be expected from their weight -- mice 0.7 times. (Austad, 1991). Also, not only are the rodents metabolically morbid, but all the model lab animals have been overfed for generations, including yeast, flatworms, and fruit flies. As an example of longevity research misattribution, the longevity effects of calorie restriction disappear when done on wild strains, including those with shorter lifespans, like rotifers or butterflies.

I wrote more about the calorie restriction myth here: https://www.unaging.com/calorie-restriction/

Aubrey de Grey poured water on caloric restriction in Ending Aging for theoretical evo reasons, this skepticism just keeps getting validated.

> First, the degree of life extension that has been obtained thus far in various species exhibits a disheartening pattern: it works much better in shorter-lived species than in longer-lived ones. Nematodes, as I mentioned above, can live several times as long as normal if starved at the right point in their development; so can fruit flies. Mice and rats, however, can only be pushed to live about 40 percent longer than normal. This pattern led me, a few years ago, to wonder whether humans might even be less responsive than that, and I quickly realized that there is indeed a simple evolutionary reason to expect just such a thing. It’s a consequence of the fact that the duration of a famine is determined by the environment and is independent of the natural rate of aging of the species experiencing it.